Unchecked toxic language in online communities can drive users away, damage brand reputation, and create regulatory risk. In the Anti-Defamation League’s 2023 survey, 52% of respondents reported experiencing online harassment. These problems are not limited to any single platform or audience.

Why Online Spaces Demand Profanity Management

To reduce these risks, online spaces need more than reactive, piecemeal bans on offensive words. A consistent, scalable approach to profanity management supports safer interactions and helps platforms stay ahead of harmful trends.

Pros and Cons of Manual vs Automated Profanity Detection

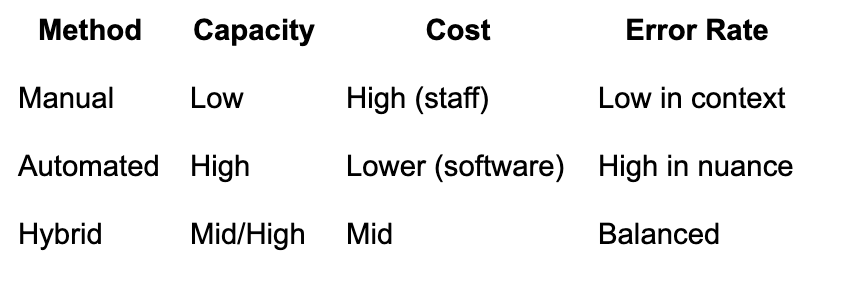

Human moderators understand context and subtle meanings. They handle sarcasm, slang, and language evolution with more accuracy. Automated systems, on the other hand, process large volumes of content quickly and consistently, but they may misinterpret context.

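At a glance: human moderators offer contextual judgment but limited throughput, while automated systems offer speed and consistency but weaker handling of context.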

For mid-sized and large online communities, a hybrid approach combines the strengths of both systems. Automated filters flag most issues, while human moderators review edge cases and appeals.
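In practice, a hybrid pipeline often comes down to a routing rule. The Python sketch below assumes your automated filter returns an offensiveness score between 0.0 and 1.0; the `route` function, the `RoutingPolicy` cutoffs (0.9 and 0.5), and the three actions are hypothetical choices for illustration, not any particular product's API.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class RoutingPolicy:
    # Hypothetical cutoffs on a 0.0-1.0 score produced by some automated filter.
    block_at: float = 0.9   # at or above this, act automatically
    review_at: float = 0.5  # from review_at up to block_at, ask a human


def route(score: float, policy: RoutingPolicy = RoutingPolicy()) -> Action:
    """Decide how to handle a message from its automated offensiveness score."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= policy.block_at:
        return Action.BLOCK
    if score >= policy.review_at:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


if __name__ == "__main__":
    for s in (0.1, 0.6, 0.95):
        print(s, route(s).value)
```

Only the ambiguous middle band reaches human moderators, which keeps their queue focused on edge cases. Appeals could enter that same human queue regardless of the original score.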

Implementing Robust Profanity Filtering Techniques

Build a flexible profanity system by:

Customizing blocklists to reflect your audience and platform goals

Using pattern matching to catch masked or altered spellings

Applying context-aware algorithms to reduce false positives
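The first two techniques can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the placeholder blocklist (`darn`, `heck`), the lookalike table, and the helper names are hypothetical. The word boundaries (`\b`) are only a crude guard against false positives; they are not a context-aware algorithm, which would typically require a trained classifier.

```python
import re

# Hypothetical blocklist of mild placeholder words; tailor a real one to your community.
BLOCKLIST = {"darn", "heck"}

# An illustrative subset of lookalike characters used to disguise letters.
LOOKALIKES = str.maketrans({"4": "a", "@": "a", "3": "e", "1": "i", "0": "o", "$": "s", "5": "s"})


def build_pattern(words: set[str]) -> re.Pattern:
    """Compile one regex that matches any blocklisted word, allowing stretched letters."""
    # "h+e+c+k+" also matches "heeeck"; \b stops matches inside longer words.
    alternatives = ("".join(re.escape(ch) + "+" for ch in word) for word in sorted(words))
    return re.compile(r"\b(?:" + "|".join(alternatives) + r")\b")


PATTERN = build_pattern(BLOCKLIST)


def find_profanity(text: str) -> list[str]:
    """Return blocklisted words found after lowercasing and undoing lookalike swaps."""
    normalized = text.lower().translate(LOOKALIKES)
    return PATTERN.findall(normalized)


if __name__ == "__main__":
    print(find_profanity("Oh h3ck, that's d@rn annoying"))  # ['heck', 'darn']
    print(find_profanity("Heeeck!"))                        # ['heeeck']
    print(find_profanity("darnation"))                      # []
```

Normalizing before matching lets one pattern cover disguised spellings such as `d@rn`, while allowing each letter to repeat catches drawn-out forms like `heeeck`.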

Online language changes fast, so keeping filters current matters. When new slang appears, add it and its variations to your blocklist. For example, if “ph*k” starts trending, list both it and its common misspellings.
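One way to list a new term together with its variations is to generate masked forms automatically whenever you add it. In this hypothetical sketch, `masked_variants` produces every spelling with a single letter replaced by `*` or `#`, and `add_trending_term` adds those forms for the term and any misspellings you supply. The placeholder word `heck` stands in for a real trending term, and the mask characters are an assumption.

```python
from collections.abc import Iterable

# Characters users commonly substitute for letters to dodge filters (illustrative).
MASK_CHARS = "*#"


def masked_variants(term: str) -> set[str]:
    """Return the term plus every spelling with one letter swapped for a mask character."""
    term = term.lower()
    variants = {term}
    for i, ch in enumerate(term):
        if ch.isalpha():
            for mask in MASK_CHARS:
                variants.add(term[:i] + mask + term[i + 1:])
    return variants


def add_trending_term(blocklist: set[str], term: str, misspellings: Iterable[str] = ()) -> None:
    """Add a term, its known misspellings, and their masked forms to the blocklist in place."""
    for spelling in (term, *misspellings):
        blocklist |= masked_variants(spelling)


if __name__ == "__main__":
    blocklist: set[str] = set()
    add_trending_term(blocklist, "heck", misspellings=["hek"])
    print(len(blocklist))  # 16
```

The number of variants grows with word length and the number of mask characters, so this approach suits exact token lookups against a modest list.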

Choose adaptable tools. A mature profanity filter should handle new words and misspellings, and use contextual clues to improve accuracy.

Fine-Tuning Your Profanity Screening Settings

Adjusting sensitivity levels helps balance strictness and user experience. Higher sensitivity (a lower flagging threshold) catches more abuse but may flag harmless jokes. Lower sensitivity (a higher threshold) lets more offensive messages slip through.
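To see the trade-off concretely, compare outcomes at several thresholds on a labeled sample. The scores and verdicts below are invented for illustration, and `outcomes` is a hypothetical helper; a real evaluation would use messages your moderators have actually reviewed.

```python
# Illustrative, made-up data (not real results): (filter score, human verdict: is it a violation?)
SAMPLE = [
    (0.95, True), (0.85, True), (0.70, False), (0.60, True),
    (0.40, False), (0.30, True), (0.20, False), (0.10, False),
]


def outcomes(threshold: float, sample=SAMPLE) -> tuple[int, int, int]:
    """Flag every message scoring at or above threshold; return (caught, false_flags, missed)."""
    caught = sum(1 for score, bad in sample if score >= threshold and bad)
    false_flags = sum(1 for score, bad in sample if score >= threshold and not bad)
    missed = sum(1 for score, bad in sample if score < threshold and bad)
    return caught, false_flags, missed


if __name__ == "__main__":
    for t in (0.25, 0.5, 0.8):
        caught, false_flags, missed = outcomes(t)
        print(f"threshold={t}: caught={caught} false_flags={false_flags} missed={missed}")
```

On this toy sample, lowering the threshold from 0.8 to 0.25 raises caught violations from 2 to 4 but also raises false flags from 0 to 2, which is exactly the balance the sensitivity setting controls.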

Set up user feedback channels and appeals processes. Allow users to report mistakes or explain context. Use their input to update lists or train detection models.
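Appeal outcomes can feed back into list maintenance. The sketch below assumes each appeal is tied to the term that triggered the flag; `AppealLog`, its thresholds (at least 3 appeals, 80% overturned), and the example terms are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class AppealLog:
    """Tracks appeal outcomes per flagged term (a hypothetical helper, not a library API)."""
    min_appeals: int = 3         # assumed: ignore terms with too few appeals to judge
    overturn_ratio: float = 0.8  # assumed: share of appeals that overturn the flag
    appeals: dict[str, int] = field(default_factory=lambda: defaultdict(int))
    overturned: dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def record(self, term: str, was_overturned: bool) -> None:
        """Log one appeal against a flag on `term`; was_overturned means the flag was wrong."""
        self.appeals[term] += 1
        if was_overturned:
            self.overturned[term] += 1

    def terms_to_review(self) -> list[str]:
        """Terms whose flags are mostly overturned on appeal, sorted for a human list review."""
        return sorted(
            term for term, count in self.appeals.items()
            if count >= self.min_appeals and self.overturned[term] / count >= self.overturn_ratio
        )


if __name__ == "__main__":
    log = AppealLog()
    for _ in range(3):
        log.record("scrap", was_overturned=True)
    log.record("heck", was_overturned=True)
    log.record("heck", was_overturned=False)
    log.record("heck", was_overturned=False)
    print(log.terms_to_review())  # ['scrap']
```

The helper only surfaces candidates rather than editing the blocklist automatically, so a person still decides whether a term should be dropped or handled with more context.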

Regularly refresh your blocklists and update your filtering algorithms so they catch newly coined slang and shifts in how existing words are used.

Measuring the Success of Your Content Moderation Filters

Track performance using clear metrics:

Detection rate of actual policy violations

Number of false positives and negatives

User satisfaction and complaint rates
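The first two metrics can be computed from a sample that moderators have audited. This sketch assumes each audited message is recorded as a pair of booleans (the filter flagged it, a human judged it a violation); `evaluate` and `FilterMetrics` are hypothetical names. User satisfaction and complaint rates usually come from surveys and support data, so they are left out here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FilterMetrics:
    detection_rate: float  # share of actual violations the filter flagged (recall)
    precision: float       # share of flagged messages that were actual violations
    false_positives: int   # flagged, but not a violation
    false_negatives: int   # a violation the filter missed


def evaluate(audited: list[tuple[bool, bool]]) -> FilterMetrics:
    """Score a filter from audited (filter_flagged, is_violation) pairs."""
    tp = sum(1 for flagged, bad in audited if flagged and bad)
    fp = sum(1 for flagged, bad in audited if flagged and not bad)
    fn = sum(1 for flagged, bad in audited if not flagged and bad)
    # Report 0.0 when a ratio is undefined (no violations, or nothing flagged).
    detection_rate = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return FilterMetrics(detection_rate, precision, fp, fn)


if __name__ == "__main__":
    audited = [(True, True)] * 8 + [(True, False)] * 2 + [(False, True)] * 2 + [(False, False)] * 88
    print(evaluate(audited))
```

For example, an audit of 100 messages with 8 correct flags, 2 wrong flags, and 2 missed violations gives a detection rate and precision of 0.8 each.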

Set up dashboards to monitor these numbers. Schedule audits to ensure the filter stays accurate and effective.
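Audits need a fair sample. This minimal sketch, with a hypothetical `audit_sample` helper, draws random messages from both the flagged and unflagged pools; sampling only flagged content would reveal false positives but never false negatives. The sample size and seed are assumptions to adjust for your volume.

```python
import random
from collections.abc import Sequence
from typing import Optional, TypeVar

T = TypeVar("T")


def audit_sample(flagged: Sequence[T], unflagged: Sequence[T], per_group: int = 50,
                 seed: Optional[int] = None) -> dict[str, list[T]]:
    """Draw up to per_group random messages from each group for a human audit."""
    rng = random.Random(seed)  # a fixed seed makes an audit batch reproducible
    return {
        # Flagged items reveal false positives.
        "flagged": rng.sample(list(flagged), min(per_group, len(flagged))),
        # Unflagged items are the only place false negatives show up.
        "unflagged": rng.sample(list(unflagged), min(per_group, len(unflagged))),
    }


if __name__ == "__main__":
    flagged_ids = list(range(1, 21))        # hypothetical message IDs
    unflagged_ids = list(range(100, 1100))
    batch = audit_sample(flagged_ids, unflagged_ids, per_group=5, seed=42)
    print(len(batch["flagged"]), len(batch["unflagged"]))  # 5 5
```

Moderators can then label these items, and the results can be used to compute the metrics listed above.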

Share your moderation guidelines and appeal procedures with users. Transparency builds trust in your process.

Beyond Censorship: Fostering Lasting Digital Respect

Strong moderation is about more than blocking bad words—it supports healthy, open discussion for everyone. Keep reviewing your policies, monitor how well your tools perform, and listen to your community.

Stay flexible as language shifts. Use feedback and data to improve. Lead the way in promoting courteous conversation without silencing honest voices.